A Brief History of Electrical Technology

Part 5: The Integrated Circuit and the Arpanet

by piero scaruffi

The Integrated Circuit (Copyright © 2016 Piero Scaruffi)

The early transistors were made of germanium. It was only in 1954 that both Morris Tanenbaum's team at Bell Labs (in January) and Gordon Teal's team at Texas Instruments (in May) produced silicon transistors. The one made by Texas Instruments was the first silicon transistor to be commercially available (in June). Teal had been hired from Bell Labs in 1953, one year after T.I. purchased the transistor license from AT&T, and his research was funded by T.I.'s merger with the bigger and richer firm Intercontinental Rubber. Both germanium and silicon are semiconductors, but silicon, unlike germanium, is easily found in nature (in sand all over the world). Germanium works well at low temperatures, but silicon works better at the higher temperatures typical of guided missiles, and Texas Instruments was still keen to serve the military market with new technology after the end of the Korean War. Teal's team led the shift from germanium to silicon transistors after an impressive demonstration at a conference in Dayton (Ohio) in May 1954.

Before the end of the year Philco, Raytheon, RCA, and Hughes joined the ranks of silicon-transistor makers, but only T.I. actually had one for sale. In 1955 Texas Instruments was the world's leader in transistors, and Transitron was second in the USA; together they held about 35% of the domestic market.

The story of the diffused silicon transistor (the kind that eventually won) is similar: Bell Labs came first in the lab (Tanenbaum again, in 1955, after his colleague Calvin Fuller had developed the diffusion process the previous year), but T.I. was first to market it: Elmer Wolff's 2N389 in 1957. Vacuum tubes still dominated the electronics industry: 1.3 billion vacuum tubes were sold in the USA between 1954 and 1956, compared with 17 million germanium transistors and 11 million silicon transistors. But the feeling that semiconductor-based transistors could replace vacuum tubes grew stronger. The Cold War demanded something more reliable and less cumbersome than vacuum tubes to guide nuclear missiles in a potential nuclear war.

After the transistor, William Shockley worked on a totally different invention, which in 1952 he called "Electrooptical Control System": an electronic eye for industrial robots (several years before the first robot was deployed at General Motors). Then he moved to California to be near his ailing mother, and divorced his wife in 1955.

In September 1955 Arnold Beckman (owner of Beckman Instruments, a company based in Los Angeles) financed him to open a research center for transistors in Palo Alto, the Shockley Semiconductor Laboratory.

Shockley, using his prestige (soon to be reinforced by the Nobel prize), hired young local engineers, most of them still in their 20s, like Philco's physicist Robert Noyce (an MIT graduate) from Philadelphia, Johns Hopkins University chemist Gordon Moore (a Caltech graduate) from Maryland (but actually the only one born and raised in the Bay Area), Austrian-born Western Electric industrial engineer Eugene Kleiner (a New York University graduate) from New Jersey, Swiss-born Caltech physicist Jean Hoerni (who had studied philosophy at Cambridge University) from Los Angeles, Dow Chemical's metallurgist Sheldon Roberts (another MIT graduate) from Michigan, Western Electric's mechanical engineer Julius Blank from New Jersey, MIT physicist Jay Last (who had just graduated) from Boston, and Stanford Research Institute's physicist Victor Grinich (the only Stanford graduate). Shockley was skilled at assembling teams, as he had demonstrated at Bell Labs. However, he was not as good at motivating his staff. In October 1957 these eight engineers, fed up with his management, were introduced by a young New York investment banker, Arthur Rock, to the very rich Sherman Fairchild, a manufacturer of aerial cameras and small airplanes and, in particular, owner of Fairchild Camera and Instrument. Sherman Fairchild was even richer than Tom Watson because he was his father George's only son, whereas Tom Watson was one of four children of his father Thomas. Fairchild funded the new startup and Fairchild Semiconductor was born in Mountain View, with the mission to develop silicon transistors, Shockley's original plan. Fairchild Semiconductor, more than Hewlett-Packard, foreshadowed the stereotypical Silicon Valley startup.

The competition was tough: Transitron, Raytheon, Motorola, Texas Instruments, Philco, RCA, General Electric, Sylvania/GTE, ... Fairchild's first big contract, in 1958, was facilitated by Sherman Fairchild: 100 silicon transistors for IBM. Then Fairchild came up with the first "double-diffusion" transistor, which led to the next big contract. Autonetics, a division of North American Aviation in Los Angeles, was a leader in inertial navigation systems, having pioneered the inertial autonavigator in 1950 and built a transistorized computer for the guidance system of the Navaho missile in 1955. The largest military project of the time was the Minuteman guided missile, and Autonetics reasoned that Fairchild's "double-diffusion" transistors were ideal for it.

Ten years after the invention of the transistor, the technology to make electrical circuits had evolved. The printed circuit board had been invented in 1936 in Britain by Paul Eisler, an Austrian-born Jewish refugee and electrical engineer with experience in the printing business. Technically an illegal alien, Eisler built the first radio made with a printed circuit board in 1940 while he was in prison. He started making the first commercial printed-circuit boards in 1943 for Technograph.

During World War II Harry Rubinstein from the Centralab Division of Wisconsin-based military contractor Globe-Union (originally founded in 1925 to make car batteries and radio equipment) perfected the printed-circuit board for anti-aircraft devices, and after the war Globe-Union became the industrial leader in printed circuits for radio and television sets, hiring the best electrical engineers of the region (such as Jack Kilby, who graduated in 1947 from University of Illinois).

In 1949 two US Army engineers, Moe Abramson and Stanislaus Danko, developed the Auto-Sembly process, and that's when transistors and printed circuits came together.

In 1952 Globe-Union (and, in particular, Jack Kilby) attended the famous Bell Labs symposium on transistor applications. DEC was founded in 1957 not to make computers but to make circuit modules that could be used in many industrial applications, and that were also used to build MIT's LINC in 1962. Before building calculators, An Wang's business was selling "Logi-blocs", i.e. circuit boards.

Companies such as T.I., Fairchild and DEC were operating at the border of printing, photography, miniaturization and even urban planning: a circuit board was an increasingly small printed photograph of the electronic equivalent of a city block. Jack Kilby joined T.I. in 1958. His idea was to make all the electronic components of a module out of the same block of material, silicon being the material of choice at T.I., even if some components (e.g. resistors) were much cheaper to make in other materials (e.g. carbon). In September 1958 Kilby built his first integrated circuit (actually made of germanium).

With Kilby's technique multiple transistors could be integrated on a single layer of semiconductor material. Previously, transistors had to be individually carved out of silicon or germanium, and then wired together with the other components of the circuit. This was a difficult, time-consuming and error-prone task that was mostly done manually. Putting all the electrical components of a circuit on a silicon or germanium "wafer" the size of a fingernail greatly simplified the process and heralded the era of mass production. Fairchild Semiconductor improved Kilby's idea. In March 1959 Jean Hoerni invented the planar process that enabled great precision in silicon components.

Then Noyce developed a planar integrated circuit, which used photolithography to produce many transistors, and the connections between them, from a single chunk of silicon. Hoerni's planar technique is what really made integrated circuits practical and efficient, because it allowed a machine to make the connections at the same time that the components were made. The planar process was easier with silicon than with germanium, and that eventually led to the demise of germanium. Hoerni's planar process enabled the mass production of chips and can be credited with creating the semiconductor industry as it came to be. Fairchild introduced the 2N1613 planar transistor commercially in April 1960, and its first commercial single-chip integrated circuit (the Fairchild 900) in 1961, a few months after the Texas Instruments SN502.

Ironically, despite the great developments of the electronics industry on the East and West Coasts, the inventors of the integrated circuit were both raised in the Midwest: Kilby in Kansas and Noyce in Iowa. The integrated circuit was the product of a Midwestern, Great Plains, farming culture. The motivation to package multiple transistors into the same chip was that the wiring had become the real cost. Both Fairchild and Texas Instruments had improved the process of printing the electronic chips, but each chip contained only one transistor and the wiring ran outside the chip. Progress in the wiring was not keeping pace with progress in printing, so putting the wiring on the chip saved money. For the record, this wasn't really "silicon" valley yet: almost all the transistors made in the world were still made of germanium, and this would remain true throughout the early 1960s.

The munificent benefactor of integrated circuits was the Air Force, which was engaged in building ever more sophisticated and reliable ballistic missiles. MIT's Instrumentation Laboratory, led by Charles Draper, designed the guidance system for the ballistic missile Polaris (deployed in 1960) and was the first lab to experiment with Texas Instruments' integrated circuits. In 1961 Harvey Cragon of Texas Instruments demonstrated to the Air Force a tiny computer made of 587 integrated circuits (a "molecular electronic computer"), built with financial support from Richard Alberts of the Air Force Electronic Components Laboratory in Dayton (Ohio), and that demo convinced the military establishment.

The Air Force's next big project was the Minuteman missile system (deployed in 1962). In 1962 Autonetics built the D-17B, a transistorized (discrete-component) computer, for the guidance system of this missile, while the Air Force was beginning to redesign the Minuteman's logic using integrated circuits; in 1964 the Minuteman II debuted, the first high-tech weapon equipped with integrated circuits. Its integrated circuits were made by Texas Instruments and Westinghouse (where the Hungarian-born inventor George Sziklai had put together a team to work on silicon transistors and where the Chinese-born HungChang Lin designed the first analog integrated circuit). In 1963 there was no customer for integrated circuits other than the US military.

By 1964 T.I. had sold 100,000 integrated circuits to the Air Force. The story was no different in Britain, where in 1961 Ferranti developed its integrated circuits for the British navy. Fairchild, instead, steered away from military contracts. The second benefactor was NASA. In May 1961 US president John Kennedy set the national goal of putting a man on the Moon before the end of the decade. NASA launched three manned programs. The first one, the Mercury capsule, which carried only one astronaut and was built by McDonnell Aircraft, had no computer. For the Gemini capsule NASA commissioned a computer from IBM, the Gemini Digital Computer, a monster that was basically an evolution of SAGE. The guidance and navigation computer for the Apollo project was developed, starting in 1961, by the MIT Instrumentation Laboratory. Unlike IBM's Gemini Digital Computer, it used integrated circuits: at the end of 1962 Noyce, an MIT graduate, convinced MIT to use Fairchild's integrated circuits. Fairchild licensed its technology to Philco of Philadelphia, which in 1964 was chosen by NASA to supply the thousands of integrated circuits used in the Apollo Guidance Computers. The Apollo guidance system, built by Raytheon using about 5,000 Philco integrated circuits, debuted in 1965.

NASA also helped Texas Instruments. Bob Cook designed T.I.'s first planar integrated circuit for the Optical Aspect Computer on NASA's Interplanetary Monitoring Probe of 1963. These were the first integrated circuits to leave planet Earth. By 1965 the Air Force's Minuteman project had again overtaken NASA's Apollo project as the largest single buyer of integrated circuits. Thanks to the simultaneous Minuteman II and Apollo projects, the price of integrated circuits dropped dramatically (from $120 in 1961 to $25 in 1971), making it possible for computer makers to incorporate integrated circuits in commercial computers. In 1965 RCA and SDS (Scientific Data Systems) introduced the first computers made with integrated circuits: RCA's Spectra 70 and the SDS 925.

Another early manufacturer of minicomputers made with integrated circuits was Systems Engineering Laboratories, founded in Florida in 1961 (a spin-off of Radiation, a manufacturer of space and military equipment), which sold the SEL 810.

Meanwhile, Fairchild excelled at hiring the best talent around. In 1959 alone it hired Don Farina (from Sperry Gyroscope), James Nall (from the National Bureau of Standards), Bob Norman (from Sperry Gyroscope), Don Valentine (from Raytheon), and Charles Sporck (from General Electric). Later came Jerry Sanders (1961, from Motorola), Pierre Lamond (1962, from Transitron), Jack Gifford (1963, just graduated from UCLA), Mike Markkula (1966, from Hughes), etc.

However, the eight founders of Fairchild Semiconductor made the same mistake that Shockley had made: they upset their employees and caused an exodus. A number of engineers (led by David Allison) believed that the firm should have focused more on integrated circuits, and in 1961 they left Fairchild to start Signetics. Signetics benefited from the decision by the Department of Defense in 1963 to push for architectures based on integrated circuits, and throughout 1964 it dwarfed Fairchild and everybody else in the manufacturing of integrated circuits. In fact, Fairchild may be more important in the history of the semiconductor industry for the engineers that it trained than for the technology it invented, because many of those engineers went on to start more innovative firms. From the early days Robert Noyce introduced an unorthodox management style at Fairchild Semiconductor, treating team members as family members, dropping the suit-and-tie dress code, and inaugurating a more casual and egalitarian work environment, a style that he probably inherited from his old boss Carlo Bocciarelli at Philco.

The government, and the military in particular, was no less important than the inventors, because initially the semiconductor industry depended almost entirely on government contracts. Those contracts not only kept the semiconductor industry alive but prompted it to invest in continuous improvements, and they are the very reason that prices kept declining. As prices declined, commercial applications became possible. An early supporter of the Bay Area's semiconductor industry was Seymour Cray at Control Data Corporation (CDC), who had built the first large transistor computer in 1960 (the CDC 1604). Cray sponsored research at Fairchild that resulted (in July 1961) in a silicon transistor faster than any germanium transistor ever made. Cray's new "super-computer", the CDC 6600, employed 600,000 silicon transistors made by Fairchild.

The integrated circuit was also sponsored by NASA for its Apollo mission to send a man to the Moon. In August 1961 NASA, which had been using analog computers, commissioned MIT's Instrumentation Lab to build a digital computer. The Apollo Guidance Computer (AGC) became the first computer to use integrated circuits, each unit made with more than 4,000 Fairchild integrated circuits (the Micrologic series). In 1964 NASA switched to Philco's integrated circuits, thus turning Philco into a semiconductor giant and enabling it to buy General Microelectronics.

Progress at Fairchild was rapid, and mainly due to two new employees from the Midwest: Dave Talbert (hired in 1962) and Bob Widlar (hired in 1963). In 1963 Widlar (a wildly eccentric character who had served in the Air Force) produced the first single-chip "op-amp".

In 1964 Talbert and Widlar created the first practical analog (or "linear") integrated circuit, which opened a whole new world of applications. HungChang Lin had already built one in 1963 at Westinghouse for Autonetics' Minuteman guidance system, but the products that spread around the world were Fairchild's μA702 of 1964 and μA709 of 1965. Moreover, Talbert's and Widlar's work set the standard for the design of semiconductor devices.

TTL ("Transistor-Transistor Logic") had probably been invented in 1961 by James Buie at a Los Angeles startup called Pacific Semiconductors, a few months after the introduction of Fairchild's Micrologic (a simplified kind of Resistor-Transistor Logic, or RTL), but the firm never released a TTL product. In 1962 Signetics introduced its DTL ("Diode-Transistor Logic") product Utilogic, and Fairchild followed suit with the DTL 930. In 1963 Sylvania introduced the first commercial TTL integrated circuits, the Sylvania Universal High-Level Logic family (SUHL), developed by Thomas Longo, a physicist hired from Purdue University who in 1962 had created the first gigahertz transistor, the Sylvania 2N2784.

TTL quickly became the standard for integrated circuits among semiconductor manufacturers, helped by the fact that Litton (a Los Angeles-based military contractor) selected Sylvania's TTL technology for the guidance computer of the first long-range air-to-air missile, the AIM-54 Phoenix. In 1964 Jerry Luecke at Texas Instruments designed the TTL series 5400. In 1966 Longo moved to Transitron and designed their 16-bit TTL RAM.

A Metal-Oxide Semiconductor (MOS) element consists of three layers: a conducting electrode (metal), an insulating substance (typically silicon dioxide, i.e. glass), and the semiconducting layer (typically silicon). In 1963 several Fairchild engineers, including Robert Norman, Don Farina and Phil Ferguson, founded General Microelectronics (GMe) to focus on MOS technology. In 1964 GMe introduced the first commercial MOS integrated circuit, designed by Norman. MOS circuits offer low power consumption, low heat and high density, making it possible to squeeze hundreds of transistors onto a chip, and eventually MOS allowed engineers to achieve a much higher density of electronic components: the same function that required ten TTL chips could be implemented in one MOS chip. TTL uses Bipolar Junction Transistors (BJT), whereas MOS uses MOS Field-Effect Transistors (MOSFET), which are unipolar.

Someone at Fairchild had already pushed beyond MOS. In 1963 Frank Wanlass, a fresh graduate from the University of Utah, invented Complementary Metal-Oxide Semiconductor (CMOS), a new way to manufacture integrated circuits. Depending on whether a MOS transistor conducts through electrons (n-type) or holes (p-type), it is nMOS or pMOS. CMOS combines both types in complementary pairs so that, in a steady state, one transistor of each pair is always off; current flows mainly while the circuit switches, which greatly reduced power consumption. Thanks to CMOS, it became possible to drop semiconductors into digital watches and pocket calculators.
Wanlass quit Fairchild in December 1963 to join General Microelectronics (GMe), then quit again after just one year and moved to the East Coast, and later back to his native Utah. The gospel of CMOS spread thanks to Wanlass's frequent job changes and to his willingness to evangelize.
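
Why complementary pairs cut steady-state current can be illustrated with a toy switch-level model. The sketch below is an illustrative assumption, not Wanlass's design and not a circuit simulator: each transistor is treated as an ideal switch (nMOS on when its gate is 1, pMOS on when its gate is 0), and the function names are hypothetical. A two-input NAND gate is used because its complementary structure is easy to see.

```python
from __future__ import annotations


# Toy switch-level model (illustrative assumption): an nMOS transistor
# conducts when its gate is 1, a pMOS transistor conducts when its gate is 0.
def nmos_on(gate: int) -> bool:
    return gate == 1


def pmos_on(gate: int) -> bool:
    return gate == 0


def cmos_nand(a: int, b: int) -> tuple[int, bool]:
    """Two-input CMOS NAND gate.

    Pull-up network: two pMOS transistors in parallel (supply -> output).
    Pull-down network: two nMOS transistors in series (output -> ground).
    Returns (output, static_path), where static_path is True only if both
    networks conduct at once, i.e. a direct supply-to-ground path.
    """
    pull_up = pmos_on(a) or pmos_on(b)      # parallel: either one conducts
    pull_down = nmos_on(a) and nmos_on(b)   # series: both must conduct
    output = 1 if pull_up else 0
    return output, pull_up and pull_down


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            out, static_path = cmos_nand(a, b)
            print(f"a={a} b={b} -> out={out}, steady current path: {static_path}")
```

For every input combination exactly one of the two networks conducts, so the model never shows a steady path from supply to ground. Real chips still draw current briefly while a gate switches and through leakage, which this idealized model ignores.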

TTL and CMOS coexisted for a long time. CMOS was generally better but more expensive and more fragile.

This is where old adding-machine technology wed the new MOS technology. Victor (the Victor Adding Machine Company) and Felt & Tarrant (by then renamed the Comptometer Corporation) were old-fashioned calculator companies. In 1959 Victor had also introduced the Electrowriter to transmit handwritten pages, the first major innovation since the telautograph. In 1961 the two companies merged, and in 1965 the new Victor Comptometer Corporation released the Victor 3900, an electronic calculator with a small CRT display that incorporated General Microelectronics' MOS chips, i.e. the first MOS-based calculator (a rather cumbersome machine that did not sell well).

In 1964 another group at Fairchild, led by Rex Rice, developed the DIP ("dual in-line package") design that would remain popular for decades: it consists of two rows of electrical pins under a rectangular ceiling, similar to the rows of columns supporting a Greek temple. This made it easier to insert electronic components into circuit boards or into sockets.

Ironically, Sylvania had pioneered TTL and Fairchild had introduced DIP but Texas Instruments was the company that reaped the benefits: in 1966 they introduced a low-cost TTL family in a user-friendly DIP, the SN7400 series, that assured their supremacy into the 1970s.

Lee Boysel, a young Michigan physicist working at Douglas Aircraft in Santa Monica, was one of the people who learned about MOS from Wanlass (in 1964). After working on MOS for one year at IBM's Alabama laboratories, Boysel was hired by Fairchild in 1966. Boysel then perfected a four-phase clocking technique to create very dense MOS circuits.

Federico Faggin, originally hired by Fairchild in Italy and relocated to its Palo Alto labs in 1968, invented silicon-gate MOS transistors to replace the aluminum-gate transistors that were commonplace. Making both contacts and gates out of silicon greatly simplified the manufacturing process and therefore made it cheaper to pack a greater number of transistors into a chip. This was another step towards the exponential growth in chip density. The first silicon-gate integrated circuit was released in October 1968.

By the end of the decade, the area between Stanford and San Jose was home to several semiconductor companies, most of them spin-offs of Fairchild: Amelco (a division of Teledyne), co-founded in 1961 by three Fairchild founders including Jean Hoerni, which commercialized an analog integrated circuit; Molectro, founded in 1962 as Molecular Science Corporation by James Nall of Fairchild, which in 1965 hired the two Fairchild geniuses Bob Widlar and Dave Talbert, also made analog integrated circuits, and was acquired in 1967 by the East Coast-based National Semiconductor (controlled by pioneer high-tech venture capitalist Peter Sprague), which in turn relocated to Santa Clara in 1968 after "stealing" many more brains from Fairchild (Charlie Sporck, Pierre Lamond, Don Valentine) and GMe (Regis McKenna); General Microelectronics (GMe), which developed the first commercial MOS integrated circuits and in 1966 was bought by Philco; Intersil, started in 1967 by Jean Hoerni to produce low-power CMOS circuits; and Monolithic Memories, founded in 1968 by Fairchild engineer Zeev Drori. And there were the grandchildren, such as Electronic Arrays, founded in 1967 in Mountain View by Jim McMullen with people from General Microelectronics and Bunker Ramo. The genealogical tree of Fairchild's spin-offs was unique in the world.

In 1964 the head of Sylvania's Electronic Defense Lab (EDL), Stanford alumnus Bill Perry, took most of his staff and formed Electromagnetic Systems Laboratory (ESL) in Palo Alto, working on electronic intelligence systems and communications in collaboration with Stanford and in direct competition with his previous employer. His idea was to embed computers in these systems, something that had become feasible thanks to Fairchild's integrated circuits. By turning signals into digital streams of zeros and ones, ESL pioneered the field of digital signal processing, initially for the new satellite reconnaissance systems designed by Bud Wheelon, a former Stanford classmate who in 1962 had been appointed director of the Office of Scientific Intelligence at the CIA.

The semiconductor industry experienced a rapid acceleration towards increased power, smaller sizes and lower prices. In 1965, in a famous article ("Cramming More Components onto Integrated Circuits"), Gordon Moore predicted that the number of components on an integrated circuit would double every year (in 1975 he revised the rate to every two years, and a doubling every 18 months is also often quoted). This came to be known as "Moore's Law" and it would hold for at least four decades.
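
Moore's prediction is a statement about exponential growth: a quantity that doubles every T years grows by a factor of 2^(t/T) after t years. The back-of-the-envelope sketch below compares different doubling periods over one decade; the starting figure of 64 components in 1965 is an illustrative assumption, and `projected_components` is a hypothetical helper, not anything from Moore's article.

```python
def projected_components(start: float, years: float, doubling_period: float) -> float:
    """Exponential growth: the count doubles once every `doubling_period` years."""
    return start * 2 ** (years / doubling_period)


# Hypothetical starting point: about 64 components per chip in 1965
# (chosen for illustration; 64 = 2**6).
START_1965 = 64

for period in (1.0, 1.5, 2.0):
    count = projected_components(START_1965, years=10, doubling_period=period)
    print(f"doubling every {period} years -> about {count:,.0f} components by 1975")
```

Under these assumptions, annual doubling gives 65,536 components by 1975 (close to the roughly 65,000 that Moore's article projected for that year), while a two-year period gives only 2,048: a modest change in the doubling period compounds into a 32-fold difference after ten years.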

The military was clearly a fundamental enabler of the semiconductor industry. The transistor technology was too expensive and unstable to be developed without a long-term investor. The Cold War turned out to be even more beneficial than World War II for the high-tech industry, as weapons were becoming more and more "intelligent" and a vast apparatus of intelligence and communications was being set up worldwide. The various military agencies of the USA served as both generous venture capitalist and inexpensive testbed. In 1965 Lockheed's Missiles Division in the Bay Area had 28,000 employees versus Fairchild's 10,000: the future Silicon Valley was largely subsidized by government agencies. It was the demand from the military-industrial establishment and NASA's space program that drove quantities up and prices down so that Moore's Law could materialize.

Desktop and Pocket Calculators (Copyright © 2016 Piero Scaruffi)

The first electronic desktop calculator had been introduced in 1961 by Bell Punch in Britain, the ANITA MK, designed by Norbert Kitz, who had worked with Turing on the Pilot ACE, but it still used vacuum tubes. The ANITA (A New Inspiration To Arithmetic) was marketed through Bell Punch's Sumlock Comptometer division. Bell Punch introduced its first electronic calculator made with integrated circuits in 1969, the ANITA 1000.

In June 1963 Friden demonstrated its transistorized calculator EC-130, the first calculator to use Reverse Polish Notation; it was not programmable.
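
Reverse Polish Notation puts each operator after its operands, so an expression can be evaluated left to right with a stack and no parentheses. The following minimal Python sketch shows that stack discipline (the function name `eval_rpn` is hypothetical); it illustrates the notation only, not how the EC-130's transistor circuitry actually implemented it.

```python
from typing import List


def eval_rpn(tokens: List[str]) -> float:
    """Evaluate a Reverse Polish Notation expression using a stack."""
    ops = {
        "+": lambda x, y: x + y,
        "-": lambda x, y: x - y,
        "*": lambda x, y: x * y,
        "/": lambda x, y: x / y,
    }
    stack: List[float] = []
    for tok in tokens:
        if tok in ops:
            if len(stack) < 2:
                raise ValueError(f"operator {tok!r} needs two operands")
            y = stack.pop()  # the top of the stack is the second operand
            x = stack.pop()
            stack.append(ops[tok](x, y))
        else:
            stack.append(float(tok))  # numbers are simply pushed
    if len(stack) != 1:
        raise ValueError("malformed expression")
    return stack[0]


# (3 + 4) * 5 in infix notation becomes "3 4 + 5 *" in RPN.
print(eval_rpn("3 4 + 5 *".split()))  # → 35.0
```

The addition consumes the two numbers already on the stack and pushes 7; the multiplication then consumes that result and the 5. This is why RPN calculators need an "Enter" key but no parenthesis keys.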

One month later (July 1963) Boston-based Mathatronics demonstrated the most user-friendly of this generation of electronic desktop calculators, and it was also programmable: the Mathatron. It was the brainchild of William Kahn (who had worked at Honeywell's computer division Datamatic on Honeywell's first transistorized computer, the H-800, and then at Raytheon) and of David Shapiro, a former Raytheon engineer.

The Compet CS-10A, introduced in 1964 by Hayakawa Electric (later renamed Sharp), was electronic (germanium transistors made in Japan) but not programmable, and so was the WS-01, also introduced in 1964 by Wyle Laboratories in Los Angeles (a contractor for the aerospace industry and the military). In 1964 An Wang in Boston introduced his first electronic calculator, the LOCI (Logarithmic Calculating Instrument), made of 1,275 transistors. The LOCI II of 1965 was also programmable but was more of a minicomputer than a desktop machine. In 1966 Wang introduced a smaller machine based on the LOCI, the Wang 300, containing about 300 transistors.

In 1965 Italian computer manufacturer Olivetti introduced a programmable electronic desktop computer, the P101, designed by PierGiorgio Perotto, the only rival of the Mathatron in terms of programmability.

In 1968 HP came out with the scientific calculator 9100A that used Reverse Polish Notation. None of these calculators used integrated circuits.

Crowning this race to miniaturization, in 1967 Jack Kilby at T.I. developed the first hand-held calculator. It was battery-powered and built with integrated circuits, but not sold in large quantities. The first mass-produced desktop (if not hand-held) calculator that incorporated integrated circuits was made by Sharp in 1969, the QT8-D, using Rockwell integrated circuits.

Texas Instruments provided the integrated circuits for Canon's hand-held, battery-powered calculator Pocketronic, introduced in 1970.

Japan sped ahead in the market for hand-held calculators. Sanyo introduced the ICC-82D (Integrated Circuit Calculator), built using Sanyo's proprietary integrated circuits, in May 1970, and Sharp unveiled the EL-8 in December 1970, again using Rockwell integrated circuits. Mostek, founded in 1969 by former Texas Instruments employees, introduced the first "calculator-on-a-chip" integrated circuit, the MK6010, in November 1970; it contained more than 2,100 transistors implementing the logic for a four-function 12-digit calculator. It had been commissioned by the Japanese company Busicom (founded in 1945 as Nippon Calculating Machine to manufacture Odhner-type mechanical calculators). Busicom immediately incorporated Mostek's chip in its pocket calculator LE-120A "Handy" (February 1971).

In 1970 Electronic Arrays introduced its own chipset for calculators, and in 1971 its subsidiary International Calculating Machines (ICM) introduced the calculator ICM 816, followed by Sony's ICC-88 (the first time that Sony didn't use an in-house chipset) and, at the end of the year, by the MITS 816 from MITS (Micro Instrumentation and Telemetry Systems) of New Mexico.

As calculators adopted integrated circuits, the sizes shrank rapidly and the prices plummeted even more rapidly, leading to the Bowmar 901B "Brain" of 1971 (that used the Klixon keyboard built by Texas Instruments for use with its integrated circuits) and to the HP-35 of 1972 (that used a chipset made by Mostek).

Texas Instruments entered the hand-held calculator market only in 1972 with the TI-2500 (the "Datamath"), that used T.I.'s own "calculator-on-a-chip", the TMS1802NC.

By then Sharp was ready to introduce the EL-801 (August 1972), the first calculator to employ CMOS integrated circuits (made by Toshiba).

In 1972 several other small calculators built with integrated circuits debuted. Casio (a Japanese manufacturer of mechanical calculators founded in 1946 by Tadao Kashio) made the Casio Mini CM-601, using integrated circuits by Hitachi and NEC. Commodore, a manufacturer of typewriters originally founded in 1954 in Toronto (Canada) by Polish-Canadian Jack Tramiel, made the Minuteman 1, a semi-clone of Bowmar calculators replete with the "Klixon" keyboard, using integrated circuits by MOS Technology, an entity created in 1969 in Pennsylvania by Wisconsin's industrial manufacturing firm Allen-Bradley.

The revolution in pocket calculators came at just about the right time to save the integrated-circuit industry: military applications had made the integrated circuit cheap enough for commodities, and now commodities started driving the integrated-circuit industry. The main beneficiaries were Texas Instruments and Rockwell, the Pittsburgh-based company that in 1967 had absorbed North American Aviation and Autonetics, and that had become a major manufacturer of integrated circuits.

The Minicomputer (Copyright © 2016 Piero Scaruffi)

In 1965 the Digital Equipment Corporation (DEC) of Boston unveiled the mini-computer PDP-8. Designed by Gordon Bell and Edson DeCastro, taking inspiration from Cray's CDC 160 and Wes Clark's LINC, it was much smaller and cheaper than an IBM mainframe.

More importantly, its elegant and compact modular configuration was easy to install and move around. And it was very cheap at $18,000. The input device was a Teletype ASR-33, whose keyboard included the CTRL and the ESC keys; there were no punched cards. DEC adopted ASCII, which would remain the code of choice for minicomputers. Its instruction set was rather limited compared with the IBM mainframes, but that also meant that the PDP-8 could be easily programmed for many different tasks. The only problem with the PDP-8 was that it used a 12-bit word and 6 bits to represent a character, as opposed to the more elegant 32-bit word and 8-bit code of the IBM System/360. Programming a PDP-8 still required computer skills that most users did not have, but the PDP-8 proved that an economical architecture could be very successful as long as the users were motivated to create applications. DEC supported DECUS, the user group of DEC users founded in 1961 by Edward Fredkin, which pioneered "freeware", the idea of free software shared by users.
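
Fitting two 6-bit characters into one 12-bit word is simple bit arithmetic: shift the first character left by 6 bits and OR in the second. The Python sketch below illustrates this packing. It assumes a SIXBIT-style code (ASCII 32 to 95 shifted down by 32, so uppercase letters, digits and punctuation fit in 6 bits); that mapping is an illustrative choice, since 6-bit conventions varied across PDP-8 software, and the helper names are hypothetical.

```python
from typing import List

CHAR_BITS = 6
MASK6 = (1 << CHAR_BITS) - 1  # 0o77: the largest 6-bit code


def to_sixbit(ch: str) -> int:
    """Map one character to a 6-bit code (assumed SIXBIT-style: ASCII 32-95 minus 32)."""
    code = ord(ch.upper()) - 32  # fold lowercase into the 64-code range
    if not 0 <= code <= MASK6:
        raise ValueError(f"{ch!r} has no 6-bit code")
    return code


def from_sixbit(code: int) -> str:
    """Inverse mapping, using only the low 6 bits of `code`."""
    return chr((code & MASK6) + 32)


def pack(text: str) -> List[int]:
    """Pack text two characters per 12-bit word, padding odd lengths with a space."""
    if len(text) % 2:
        text += " "
    words = []
    for i in range(0, len(text), 2):
        high, low = to_sixbit(text[i]), to_sixbit(text[i + 1])
        words.append((high << CHAR_BITS) | low)  # result always fits in 12 bits
    return words


def unpack(words: List[int]) -> str:
    """Recover the characters: high 6 bits first, then low 6 bits."""
    return "".join(from_sixbit(w >> CHAR_BITS) + from_sixbit(w) for w in words)


words = pack("PDP8")
print([oct(w) for w in words])  # → ['0o6044', '0o6030']
print(unpack(words))            # → PDP8
```

Because 6 bits allow only 64 codes, lowercase letters must be folded to uppercase (as `to_sixbit` does), whereas an 8-bit code has room for 256 characters.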

DEC would end up selling 50,000 units of this computer, especially after later models (namely, the PDP-8/I and PDP-8/L) used integrated circuits. The success of this model, especially in industrial applications, launched Massachusetts' Route 128 around Boston as the main technological hub of the world (Silicon Valley was still small by Boston's standards). Meanwhile the PDP-6 acquired time-sharing software that created another large market for DEC, especially after it was repackaged as the improved PDP-10 in 1966. The OEM industry, which bought minicomputers and resold them embedded in its own systems, boomed. DEC, being tied to the research labs of the MIT (and its student life) rather than to its own corporate research labs (like IBM and the dwarves), relished a casual office environment (and dress code) instead of the formalities of the computer giants. The PDP became popular in universities all over the USA and Europe. A generation of students grew up programming PDPs. In 1965 a Boston neighbor of DEC's, Computer Control Company (CCC or 3C), founded in 1953 by Raytheon physicist Louis Fein (who had designed the RAYDAC), introduced the first 16-bit minicomputer, the Digital Data Processor 116 (DDP-116), designed by Gardner Hendrie. CCC was purchased by Honeywell in 1966. DEC itself spawned several companies, notably Data General, founded in 1968 by Edson DeCastro (the brain behind the PDP-8). Its Nova introduced an elegant architecture that fit a 16-bit machine on a single board (with a Texas Instruments 7483 ALU) and had only one instruction format, in which every bit had a meaning.

DEC's rival on the West Coast would soon be Hewlett-Packard, although HP was still focused on instrumentation. In 1964 HP acquired Data Systems, a five-person Detroit company owned by Union Carbide that had a design for a minicomputer. In November 1966 the HP 2116A was demonstrated: the second 16-bit minicomputer to be available commercially, built with integrated circuits from Fairchild and core memory from Ampex. It was marketed as an "instrumentation computer" and boasted interfaces for more than 20 laboratory instruments.


The DRAM (Copyright © 2016 Piero Scaruffi)

The first semiconductor RAM, which displaced magnetic core, was static: it held the data as long as power was being supplied. Scientists at Fairchild introduced the first significant innovations since Jay Forrester's magnetic core of 1953: Robert Norman invented the static RAM in 1963, and John Schmidt designed a 64-bit MOS static RAM in 1964. But Fairchild did not turn these inventions into products. The first commercial static memories were introduced in 1965 by Signetics (an 8-bit RAM for Scientific Data Systems) and IBM (a 16-bit RAM for the /360 Model 95, a supercomputer customized for NASA's Goddard Space Flight Center and described as having "ultra-high-speed thin-film memories" for a total of one megabyte plus four megabytes of core memory). In 1966 Tom Longo, now at Transitron, built the TMC3162, a 16-bit memory for the Honeywell 4200 minicomputer, and this became the first widely available semiconductor (static) RAM. In 1966 an IBM researcher, Robert Dennard, invented the Dynamic RAM (DRAM), which needed only one transistor and one capacitor to hold a bit of information, thus enabling very high densities. It is called "dynamic" because the charge stored in each capacitor slowly leaks away, so every cell must be refreshed (read and rewritten) many times per second. The combination of Kilby's integrated circuit and Dennard's dynamic RAM could potentially trigger a major revolution in computer engineering, because together the microchip and the micromemory made it possible to build much smaller computers. In 1968 Fairchild hired Tom Longo from Transitron to jumpstart its RAM business, and that same year it had its first success with (static) RAM, delivering 64-bit MOS SRAMs to Burroughs. In July 1968 Robert Noyce and Gordon Moore left Fairchild Semiconductor and started Intel (a name derived from "Integrated Electronics") in Mountain View to build memory chips, another startup facilitated by Arthur Rock.
The price of magnetic-core memories had been declining steadily for years, but Noyce and Moore believed that semiconductor memory, being able to store much more information in a small space, would become a very competitive type of computer memory. In 1968 Lee Boysel at Fairchild achieved a 256-bit dynamic RAM. Then he founded Four Phase Systems in 1969 (with other Fairchild employees as well as Frank Wanlass from General Instrument) to build 1,024-bit and 2,048-bit DRAMs (kilobits of memory on a single chip). Advanced Memory Systems, founded in 1968 not far from Intel by engineers from IBM, Motorola and Fairchild, introduced another early 1,024-bit DRAM in 1969. In 1970 RAM made of magnetic cores was still much cheaper than RAM made of integrated circuits, but in that year Hua-Thye Chua at Fairchild designed the 4100, a 256-bit static RAM that was chosen by the University of Illinois, where Daniel Slotnick was working on an experimental supercomputer for DARPA, the Illiac IV, a massively parallel computer with 64 processing units. The Illiac IV (designed at the university, but built by Burroughs and delivered to NASA Ames in 1972) was the first computer with all-semiconductor memories, and the publicity surrounding it helped kill magnetic cores. Hua-Thye Chua had shown that integrated circuits could be used to build memories holding significant amounts of data.

Honeywell had jumped late into computers but had made some bold acquisitions and hired bright engineers. Bill Regitz, a specialist in core-memory technology from Bell Labs, was one of them. He became a specialist in MOS (Metal Oxide Semiconductor) technology and came up with an idea for a better DRAM, using only three transistors per bit instead of the four or more used by previous mass-market DRAM chips like Fairchild's and Four Phase's. He shared that idea with Intel (and eventually joined Intel), which had debuted in 1969 with two static RAMs, the 64-bit 3101 and the 256-bit 1101. The result was the Intel i1103, a 1,024-bit DRAM chip introduced in October 1970. Hewlett-Packard selected it for its 9800 series, and IBM chose it for its System 370/158. It became the first bestseller in the semiconductor business.

The first company to introduce dynamic RAM in a commercial computer was actually Data General, which in 1971 introduced the SuperNova SC, the first computer equipped with RAM made of integrated circuits instead of magnetic cores, and with logic circuits built from four Signetics 8260 ALUs. Coupled with the influence of the IBM/370 (which used integrated circuits), these events established the integrated circuit as the building block of electronic computers.

Before DRAMs, the semiconductor firms mainly made money by building lucrative custom-designed integrated circuits, usually for one specific customer. DRAMs, on the contrary, were low-cost and general-purpose; in other words, a commodity. The business model shifted dramatically towards making and selling volumes of inexpensive chips, not one expensive system. Competition became much more intense as more firms entered the fray, leading to constant downward pressure on prices. In 1969 Jerry Sanders, the marketing guru of Fairchild Semiconductor, founded Advanced Micro Devices (AMD). Initially staffed with former Fairchild employees, AMD focused on logic chips, starting with the Am2501 in 1970, but in 1971 it entered the memory market with the Am3101, a 64-bit static RAM. By the end of 1970 the Santa Clara Valley, home to five of the seven largest US semiconductor manufacturers (Fairchild Semiconductor, Intel, Signetics, Four Phase, Advanced Memory Systems), had become a major center of semiconductor technology. However, core memory was still the memory of choice among large computer manufacturers, still accounting for more than 95% of all computers in the mid

Augmented Intelligence (Copyright © 2016 Piero Scaruffi)

Artificial Intelligence seemed to exert a deeper influence on philosophy than on computer science (for example, Hilary Putnam's 1960 essay "Minds and Machines", arguably the manifesto of Computational Functionalism) and certainly inspired futurists such as the British mathematician Irving John Good, who in 1963 speculated about "ultraintelligent machines" (what later came to be known as the "singularity"). In 1965 Herbert Simon predicted that "machines will be capable, within 20 years, of doing any work a man can do" (Hubert Dreyfus at RAND Corporation responded with a scathing attack, "Alchemy and Artificial Intelligence").

The school of neural networks was held back by the limited memory and processing power of computers. Nonetheless, in 1961 the British molecular biologist Donald Michie at the University of Edinburgh (who had worked with Alan Turing) used matchboxes to build MENACE (Matchbox Educable Noughts and Crosses Engine), a device capable not only of playing Tic-Tac-Toe but also of improving its performance, another implementation of "reinforcement learning" after Samuel's checkers-playing program. Simon's and Newell's logic-based approach, instead, was thriving: it didn't need big computers. In 1960 John McCarthy created the programming language that would become the epitome of Artificial Intelligence for more than two decades: LISP (List Processing). This was a mathematical feat that ignored the limitations of the hardware (McCarthy programmed it on an IBM 704). It was the first language to introduce conditionals ("if then else...") and services such as "garbage collection", and it blurred the distinction between compiler and interpreter because it had no real distinction between compile-time and run-time. By promoting functions to the status of data types just like integers and strings, LISP made almost everything a function (technically speaking, lambda expressions, quotes, and conditionals were not functions at all) and adopted recursion as a natural state of things (because functions can call themselves).
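Michie's MENACE learned by a strikingly simple reinforcement rule: one matchbox per board position, a bead for each possible move, a move chosen by drawing a bead at random, and, after each game, beads added or removed for the moves that were played depending on the result. The Python sketch below illustrates only that mechanism, not a complete Tic-Tac-Toe player. The class name `Menace`, the board encoding (a 9-character string), and the bead counts and rewards are hypothetical choices made for illustration, not Michie's actual settings.

```python
import random

# Hypothetical parameters: illustrative values, not Michie's exact settings.
INITIAL_BEADS = 3   # beads per legal move in a newly opened matchbox
WIN_REWARD = 3      # beads added for each move played in a won game
DRAW_REWARD = 1     # beads added for each move played in a drawn game
LOSS_PENALTY = 1    # beads removed for each move played in a lost game


class Menace:
    """Matchbox-style learner: one box of beads per board position."""

    def __init__(self, seed=None):
        # matchboxes[board] maps each legal move (square 0-8) to a bead count
        self.matchboxes = {}
        self.rng = random.Random(seed)

    def _box(self, board, legal_moves):
        # Open a fresh matchbox the first time a position is seen
        if board not in self.matchboxes:
            self.matchboxes[board] = {m: INITIAL_BEADS for m in legal_moves}
        return self.matchboxes[board]

    def choose(self, board, legal_moves):
        """Draw a bead at random: moves with more beads are more likely."""
        box = self._box(board, legal_moves)
        moves = list(box)
        weights = [box[m] for m in moves]
        if sum(weights) == 0:
            # Empty box: fall back to a uniform choice (a simplification assumed here)
            return self.rng.choice(moves)
        return self.rng.choices(moves, weights=weights, k=1)[0]

    def reinforce(self, history, outcome):
        """Adjust the beads for every (board, move) pair played in one game."""
        delta = {"win": WIN_REWARD, "draw": DRAW_REWARD, "loss": -LOSS_PENALTY}[outcome]
        for board, move in history:
            box = self.matchboxes[board]
            box[move] = max(0, box[move] + delta)  # bead counts never go negative


# Usage: one opening move from the empty board, followed by a win
menace = Menace(seed=1)
board = "........."
legal = [i for i, c in enumerate(board) if c == "."]
move = menace.choose(board, legal)
menace.reinforce([(board, move)], "win")
print(menace.matchboxes[board][move])  # 6
```

After the win, the chosen opening has twice as many beads as the others, so it becomes twice as likely to be drawn next time: learning without any explicit model of the game, which is why a pile of matchboxes could do it.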

In 1963 Peter Deutsch (a 17-year-old student at UC Berkeley) implemented LISP on a PDP-1. In 1963 John McCarthy moved to Stanford from the MIT and in 1966 he opened the Stanford Artificial Intelligence Laboratory (SAIL). Here another transplant from the East Coast, Herbert Simon's pupil Ed Feigenbaum, designed the first knowledge-based or "expert" system, Dendral (1965), an application of Artificial Intelligence to organic chemistry in collaboration with Carl Djerassi (one of the inventors of the birth-control pill). Unlike Simon's Logic Theorist, which was a general-purpose "thinker", Dendral focused on a specific domain (organic chemistry), just like humans tend to be experts only in some areas. This meant that Feigenbaum had to design an architecture around the domain's "heuristics", the "rules of thumb" that experts in that domain typically employ to make decisions.

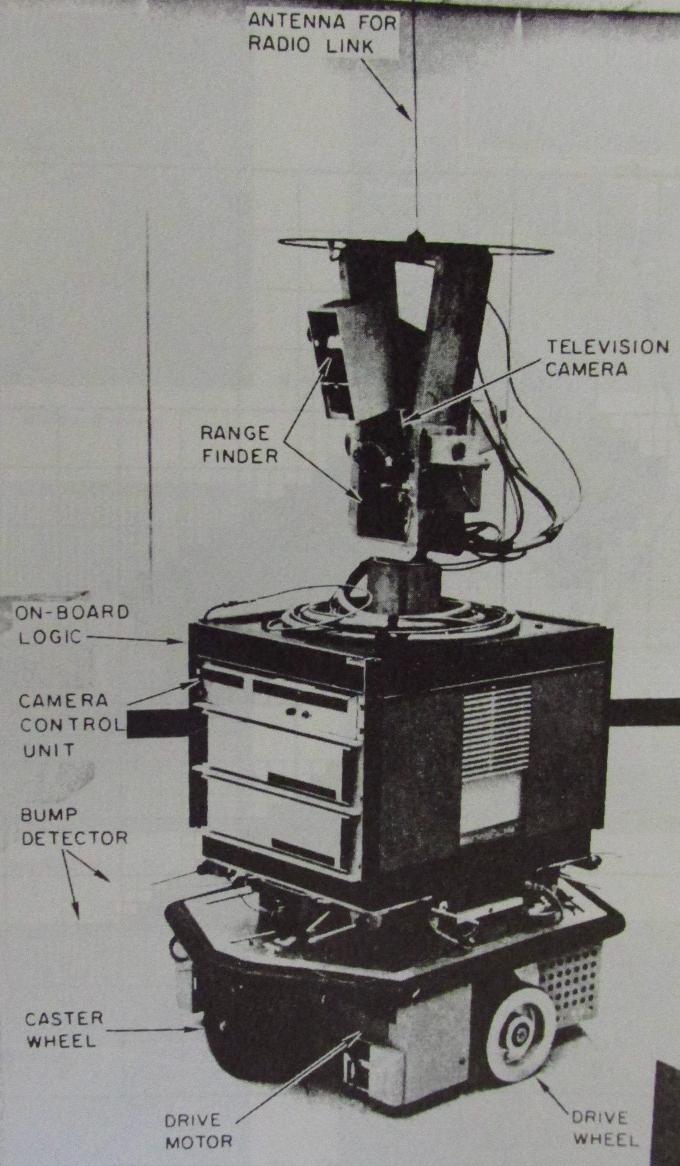

Stanford became one of the main centers of research on expert systems. In 1965 Charlie Rosen at nearby SRI started a project, almost entirely funded by DARPA, combining all the fields of Artificial Intelligence with the goal of building an autonomous mobile robot, a robot that came to be known as "Shakey the Robot" and was demonstrated in 1969 (controlled remotely by an SDS-940 computer).

In 1963 Edmund Berkeley's magazine Computers and Automation published the first "computer art", with a cover reproducing a printout by the Israeli-born MIT engineer Efraim Arazi.

In 1964 IBM introduced "Shoebox", a device capable of recognizing spoken words, although only sixteen of them: the ten digits and six arithmetic commands.

Conversational agents such as Daniel Bobrow's Student (1964), Joe Weizenbaum's Eliza (1966) and Terry Winograd's Shrdlu (1972), all three from the MIT, were the first practical implementations of natural language processing.

SRI was pursuing its own research on improving the intelligence of machines, notably within the team of Douglas Engelbart, who in 1963 had invented an input device called "the mouse". That was the first result of a much bigger project, funded since 1962 by NASA and ARPA, to reinvent human-computer interaction. In December 1968, with one of the most celebrated "demos" of all time, Engelbart publicly demonstrated the NLS ("oN-Line System"). NLS, mostly programmed by Jeff Rulifson, featured a graphical user interface and a hypertext system running on the first computer to employ the mouse. Live from San Francisco, Engelbart operated an SDS 940 computer at the SRI, 70 km away, running the Berkeley Timesharing System. NLS also implemented a sophisticated notion of user interface, which was specified by a grammar and compiled via Rulifson's compiler-compiler TreeMeta (1967). When NASA's Apollo program ended, and ARPA and NASA stopped funding Engelbart's team, SRI sold NLS to Tymshare.

Indirectly McCarthy and Engelbart started two different ways of looking at the power of computers: McCarthy represented the ideology of replacing humans with intelligent machines, whereas Engelbart, influenced by Norbert Wiener's book "The Human Use of Human Beings" (1950), represented the ideology of augmenting humans with machines that can make them smarter. The MIT was somewhere in between. In 1967 Seymour Papert, a South African-born mathematician who had joined the MIT in 1963, developed Logo with Wally Feurzeig's team at BBN, which included Daniel Bobrow and Cynthia Solomon. Logo, influenced by the theories of Swiss psychologist Jean Piaget, was conceived to teach concepts of LISP programming to children, and it was itself a dialect of LISP, written in LISP on a PDP-1. Logo created a mathematical playground where children could play with words and sentences. It was deployed in Lexington schools, where children were able to do real programming.

In 1969 the first International Joint Conference on Artificial Intelligence (IJCAI) was held in Washington. Nils Nilsson from SRI presented Shakey. Carl Hewitt from MIT's Project MAC presented Planner, a language for planning action and manipulating models in robots. Cordell Green from SRI and Richard Waldinger from Carnegie-Mellon University presented systems for the automatic synthesis of programs (automatic program writing). Roger Schank from Stanford and Daniel Bobrow from Bolt Beranek and Newman (BBN) presented studies on how to analyze the structure of sentences. However, the most important event of 1969 was the publication of "Perceptrons", a text by Marvin Minsky and Seymour Papert that seemed to ridicule neural networks and de facto caused a sharp decline in research.

Computers were becoming more affordable, but they were still very hard for non-specialists to operate. In 1967 Nicholas Negroponte, originally an architect with a vision for computer-aided design, founded the MIT's Architecture Machine Group to improve human-computer interaction, the same goal as that of Douglas Engelbart's team at the SRI.

Also in 1967 the artist Gyorgy Kepes, who had joined the MIT's School of Architecture and Planning in 1946, founded the MIT's Center for Advanced Visual Studies, a place for artists to become familiar with computer technology.

The chimera of having computers speak in human language had been rapidly abandoned, but there was still hope that computers could help translate from one human language to another. After all, they had been invented to decode encrypted messages. The Hungarian-born Peter Toma started working on machine translation at Caltech in 1956 (on a Datatron 205) and then moved in 1958 to Georgetown University. He demonstrated his Russian-to-English machine-translation software, SYSTRAN, running on an IBM/360 in 1964. The ALPAC (Automatic Language Processing Advisory Committee) report of 1966 discouraged the USA from continuing to invest, so Toma completed his program in Germany, and returned in 1968 to California to found Language Automated Translation System and Electronic Communications (LATSEC) and sell SYSTRAN to the Armed Forces at the peak of the Cold War.


The Factory (Copyright © 2016 Piero Scaruffi)

Those were also the days that witnessed the birth of the first computer graphics systems for the design of parts, the ancestors of Computer Aided Design (CAD): one by Computervision, founded in 1969 near Boston by Marty Allen and Philippe Villers to design integrated circuits (originally with software running on a time-sharing mainframe), and one by M&S Computing (later renamed Intergraph), founded in 1969 in Alabama by a group of IBM scientists (notably Jim Meadlock) who had worked on NASA's "Apollo" space program, to work for NASA and the military (originally using a Scientific Data Systems Sigma computer). In 1971 a Silicon Valley firm, Calma, launched GDS, a CAD system for designing integrated circuits.

The first PLC (Programmable Logic Controller), the Modicon 084, was introduced in 1969 by Richard Morley's Bedford Associates (later renamed Modicon, from "Modular Digital Control"), founded a year earlier near Boston. Morley's PLC put intelligence into factory machines and changed forever the assembly line and the control of machines. The automation of industrial processes became a problem of "programming", not of manufacturing. And in 1975 Modicon would introduce the "284", the first PLC driven by a microprocessor.

The Arpanet (Copyright © 2016 Piero Scaruffi)

In 1964 the Lawrence Livermore Laboratories started setting up a network of supercomputers, such as the CDC 6600s (acquired in 1964) and later the CDC 7600s (acquired in 1969). The network, which debuted in 1968 under the name Octopus, allowed access from more than one thousand remote terminals.

Another pioneering network was developed in 1968 at Tymshare in Los Altos: Tymnet, a circuit-switched network based on Scientific Data Systems' 940. It was coded by former employees of the Lawrence Livermore Laboratories such as LaRoy Tymes.

In 1968 the hypertext project run by Dutch-born Andries van Dam at Brown University, originally called Hypertext Editing System from the idea of his old Swarthmore College friend Ted Nelson, yielded the graphics-based hypertext system FRESS for the IBM 360. Influenced by Douglas Engelbart's NLS project at the SRI, FRESS featured a graphical user interface running on a PDP-8 connected to the mainframe. This system also debuted the "undo" feature, one of the simplest but also most revolutionary ideas introduced by computer science.

Paul Baran's old idea that a distributed network was needed to survive a nuclear war had led ARPA to launch in 1966 a project for one such network, which Bob Taylor assigned to MIT scientist Lawrence Roberts, who had implemented one of the earliest computer-to-computer communication systems. In October 1969 the Arpanet was inaugurated, and by December it had four nodes, three of which were in California (UC Los Angeles, in the laboratory of Leonard Kleinrock, whose 1962 thesis at the MIT had been on large data networks; the Stanford Research Institute; and UC Santa Barbara) plus one at the University of Utah. Roberts wanted each node to be free to choose whichever computer it preferred, so he commissioned a gateway computer from Boston's consulting firm Bolt Beranek and Newman (BBN). BBN delivered one Interface Message Processor (IMP) to each node, and the IMPs exchanged data over leased telephone lines.

The first message on the Arpanet was sent on the 29th of October 1969 at 22:30 from Kleinrock's building at UCLA, Boelter Hall (room 3420), by his student Charley Kline to Bill Duvall at SRI. Kleinrock's computer was an SDS Sigma 7 and SRI's was an SDS 940. (The message was supposed to be "login" but only the first two letters were received, "lo", a humble beginning for the most influential network of all time.) This was three months after Neil Armstrong had stepped on the Moon. Everybody knew of Armstrong's achievement, almost nobody heard of the Arpanet's achievement, but the latter would turn out to be much more consequential.

The Arpanet implemented Baran's original vision of 1962 for a "survivable" communications network: decentralization (so that there is no central command that the enemy can take out), fragmentation (so that each "packet" of the message contains the information required to deliver it), and relay (so that the packets are delivered by store-and-forward switching via any available path of nodes). Packet-switching is a technology that does not wed two nodes to each other but picks the route between source and destination dynamically. The telephone network, instead, is a circuit-switching network, in which a dedicated line is allocated between the two parties. Circuit-switching was more appropriate for voice (an analog signal that could hardly be divided into packets and reconstructed without a loss in quality), whereas packet-switching was more appropriate for data (easy to split and reconstruct without any loss of information). Of course, the packet-switching method (which spends time splitting the message and scattering packets around the network) is slower than the circuit-switching method (which stays on a dedicated line from beginning to end of the transmission), but the packet-switching method guarantees delivery of the whole message whereas the circuit-switching method might lose something in the transmission. Data can live with a delay of minutes and even hours, but not with a loss of information. A phone call, on the other hand, can live with the loss of a few milliseconds of voice but not with a delay. Kleinrock had developed the mathematical theory of "message switching", but that is not quite Baran's "packet switching". Donald Davies, on the other hand, had independently conceived packet switching (and coined the word "packet") in 1965 at Britain's National Physical Laboratory.

Built into the Arpanet's engineering principle was an implicit political agenda that would surface decades later: the "packets" represent an intermediate level between the physical transport layer and the application that reads the content, i.e. the packets are agnostic about the content they are transporting. All packets are treated equally regardless of whether they are talking about an earthquake or a birthday party. A computer in the network receives a packet and forwards it to the next computer in the route without knowing the importance of the message, which will be reconstructed only at the destination. No node in the network can decide which packets are more important. The routing is "neutral": "net neutrality" was inherent in the design of any packet-switching network, unlike circuit-switching networks, where the provider (e.g. AT&T) could easily assign better lines to higher-paying customers.

The Arpanet embodied a "distributed", not centralized, concept of computing. This network had no top-down hierarchy of control: every node had equal rights and duties, and the network simply reorganized itself whenever a new node was added. By 1971 the Arpanet had 15 nodes and nine of them were using PDP-10 computers.
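To make the three principles concrete (fragmentation, store-and-forward relaying over any available path, and reassembly only at the destination), here is a minimal Python sketch. It is not the IMP software: the `Packet` fields, the helper names `fragment`, `next_hop`, `deliver` and `reassemble`, the breadth-first next-hop rule, and the four-node topology (loosely modelled on the December 1969 map, with UCLA linked to SRI and UCSB, and SRI linked to UCSB and Utah) are all assumptions made for illustration; the real IMPs used their own routing algorithm.

```python
import random
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    seq: int        # position of this fragment within the message
    total: int      # number of fragments in the whole message
    payload: bytes  # opaque to every relaying node


def fragment(src, dst, message, size):
    """Split a message into packets that each carry their own delivery info."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(src, dst, i, len(chunks), c) for i, c in enumerate(chunks)]


def next_hop(links, here, dst, down, rng):
    """First hop of a shortest path from `here` to `dst` (here != dst), found by
    breadth-first search with random tie-breaks, skipping failed links in `down`.
    Returns None if `dst` is unreachable."""
    parent = {here: None}
    queue = deque([here])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk back from dst to the neighbour of `here` on the path
            while parent[node] != here:
                node = parent[node]
            return node
        neighbours = list(links[node])
        rng.shuffle(neighbours)
        for nxt in neighbours:
            if nxt not in parent and frozenset((node, nxt)) not in down:
                parent[nxt] = node
                queue.append(nxt)
    return None


def deliver(links, packet, down=frozenset(), rng=None):
    """Store-and-forward: each node reads only the header (dst) and hands the
    packet to whatever next hop is available at that moment. Returns the path."""
    rng = rng or random.Random()
    here, path = packet.src, [packet.src]
    while here != packet.dst:
        nxt = next_hop(links, here, packet.dst, down, rng)
        if nxt is None:
            raise RuntimeError(f"{packet.dst} unreachable from {here}")
        here = nxt
        path.append(here)
    return path


def reassemble(packets):
    """Only the destination puts the fragments back in order and reads them."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if len(ordered) != ordered[0].total:
        raise ValueError("missing fragments")
    return b"".join(p.payload for p in ordered)


# Usage: assumed four-node topology, loosely modelled on the December 1969 Arpanet
links = {
    "UCLA": ["SRI", "UCSB"],
    "SRI": ["UCLA", "UCSB", "UTAH"],
    "UCSB": ["UCLA", "SRI"],
    "UTAH": ["SRI"],
}
rng = random.Random(1969)
packets = fragment("UCLA", "SRI", b"login", size=2)
down = {frozenset(("UCLA", "SRI"))}  # pretend the direct line has failed
paths = [deliver(links, p, down, rng) for p in packets]
print(paths[0])             # ['UCLA', 'UCSB', 'SRI']
rng.shuffle(packets)        # fragments may arrive in any order
print(reassemble(packets))  # b'login'
```

Because relaying nodes look only at `dst`, they treat every fragment alike whatever its payload, which is the "neutral" routing described above; cutting the UCLA-SRI line simply makes the fragments detour through UCSB instead of breaking the connection.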

The network truly picked up speed after ARPA demonstrated the Arpanet in 1972 at the first International Conference on Computer Communications in Washington: Bob Kahn of DARPA/IPTO connected 20 different computers. More than 100 universities joined the Arpanet in the next two years. Several other wide-area networks were being implemented, notably Alohanet, set up in 1971 by Stanford alumnus Norman Abramson at the University of Hawaii, which used radio signals instead of telephone cables.

Meanwhile, the time-sharing industry had popularized the concept of remote computing. For example, Ohio-based CompuServe, a 1969 spin-off of the University of Arizona's time-sharing system, made money by renting time on its PDP-10 midrange computers, a service that would be called "dial-up" service.
